Yiqing Xu

National University of Singapore. yiqing[dot]xu[at]u[dot]nus[dot]edu

NUS School of Computing, COM1, 13, Computing Dr

Singapore 117417

I’m Yiqing Xu, a CS Ph.D. candidate at the National University of Singapore, advised by Prof. David Hsu. Previously, I obtained double degrees in Computer Science and Applied Mathematics from NUS.

From September 2023 to February 2024, I was a visiting Ph.D. student at MIT CSAIL, advised by Prof. Leslie Kaelbling and Prof. Tomás Lozano-Pérez.

research highlights

My research focuses on translating human objectives into signals for robotic optimization. I develop compositional and hierarchical structures as intermediate representations and design reward learning algorithms to better align robotic agents with human goals, especially when expert data is scarce or under-specified.

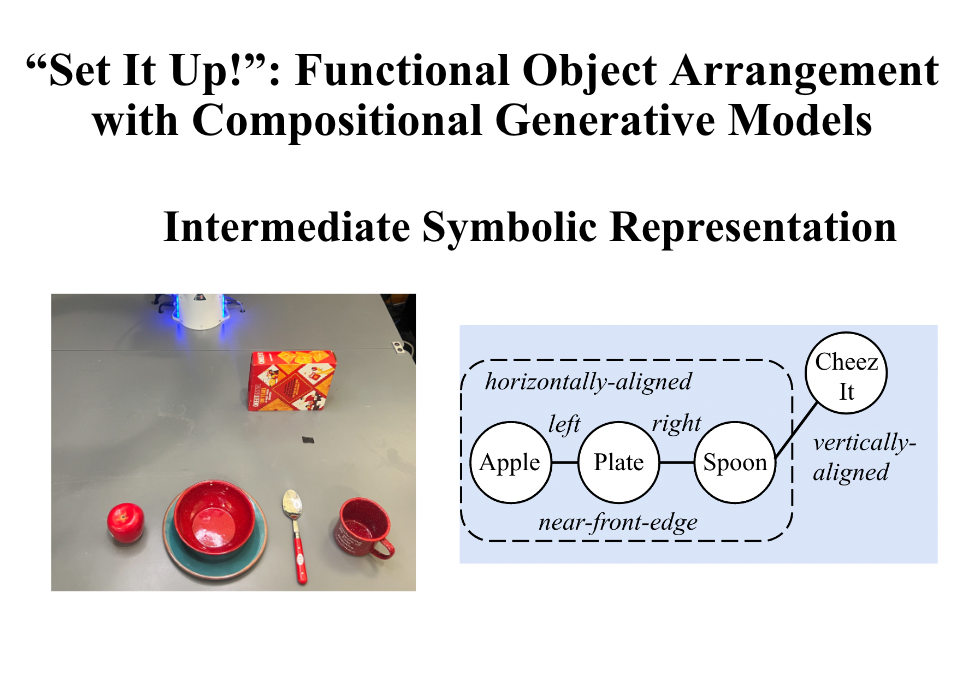

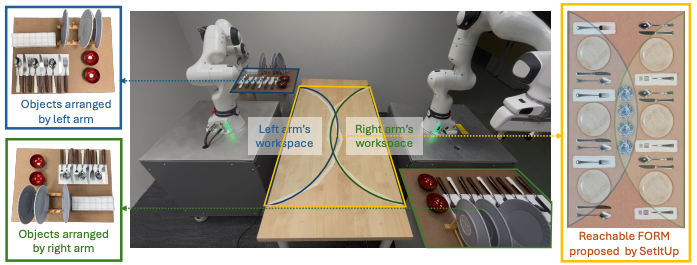

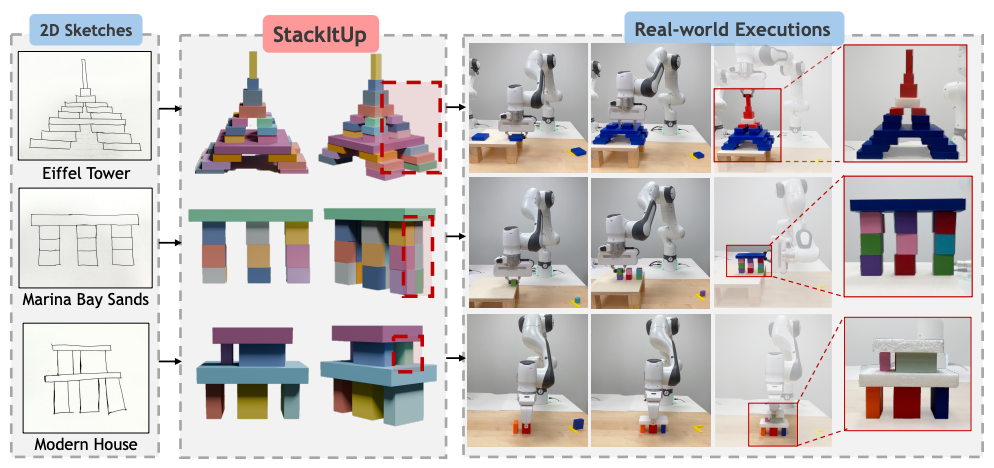

In my latest work, “Set It Up” (IJRR) and “Stack It Up” (CoRL), I explore how robots can act on human goals conveyed through ambiguous but intuitive inputs, such as language commands or freehand sketches. Both share a neuro-symbolic architecture that maps these inputs into abstract relation graphs, then grounds them into feasible physical configurations via compositional diffusion models. This approach preserves task structure, supports generalization, and learns from surprisingly few demonstrations by reusing local relational priors.

I’m excited to extend this framework in two directions. First, toward flexible skill chaining from mixed-modality input — combining coarse, abstract instructions with precise but partial demonstrations to infer symbolic task skeletons and modular reward functions that can be composed and optimized jointly. Second, toward interactive multi-modal goal specification, where robots engage with users via language, gaze, and motion to resolve ambiguity through active dialogue and inference. Across both directions, the goal remains the same: to make goal specification more expressive, adaptable, and aligned with how humans actually communicate intent.

If you’d like to chat more, feel free to email me!

selected publications

-

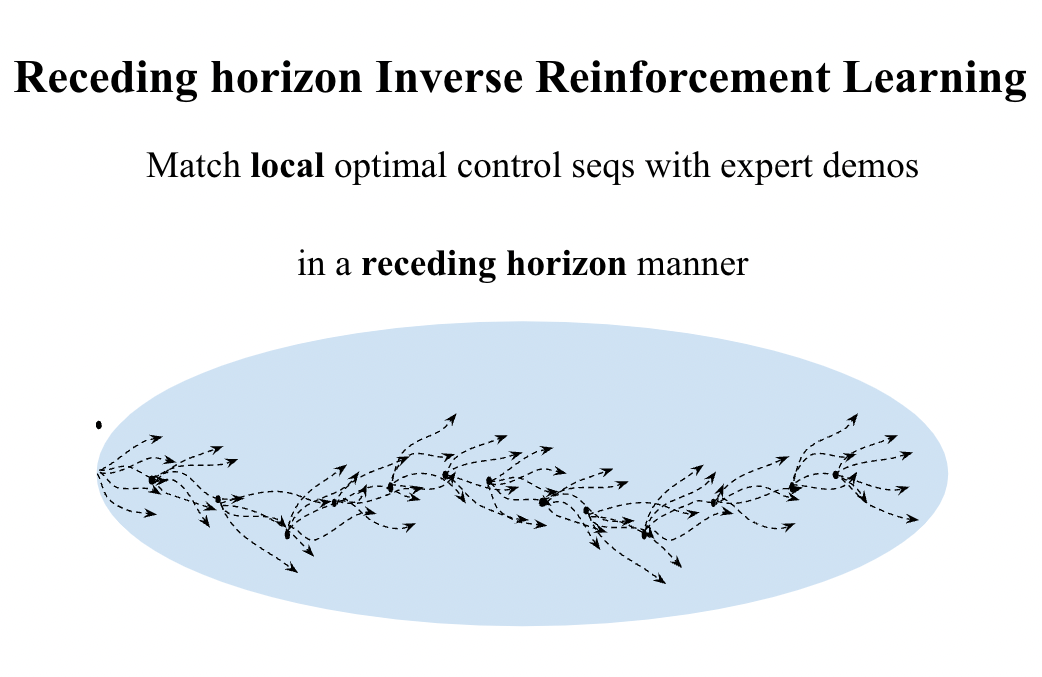

Receding Horizon Inverse Reinforcement Learning. In Advances in Neural Information Processing Systems (NeurIPS), 2022

-

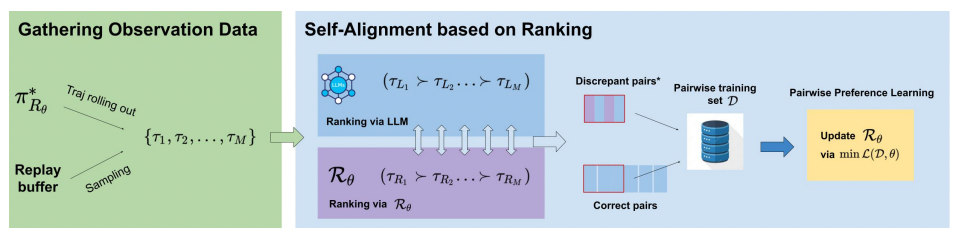

Learning Reward for Physical Skills using Large Language Model. In Conference on Robot Learning (CoRL), LangRob workshop, 2023

-

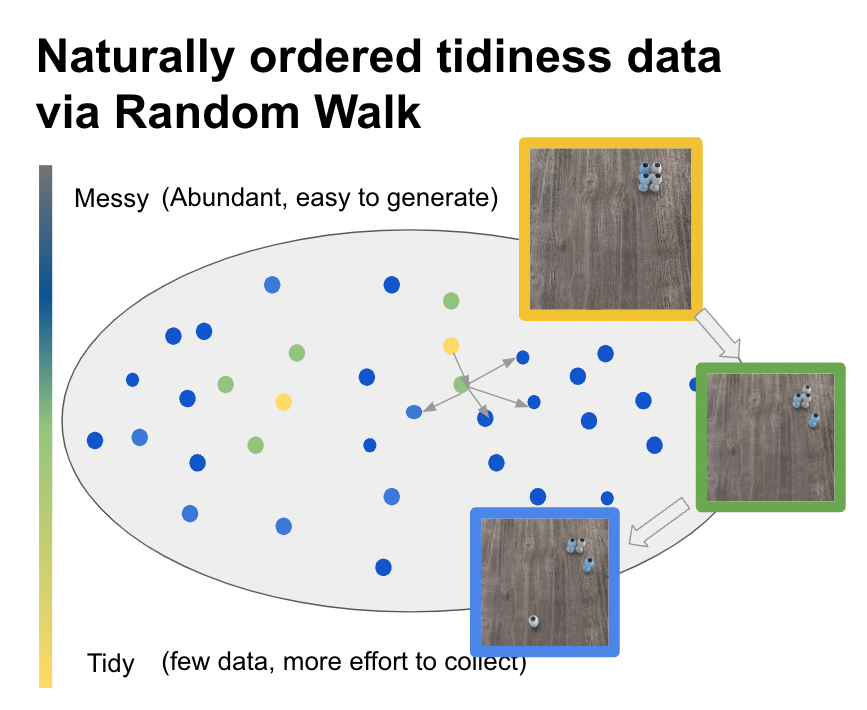

"Tidy Up the Table": Grounding Common-sense Objective for Tabletop Object Rearrangement. In Robotics: Science and Systems (RSS), LangRob workshop, 2023

-

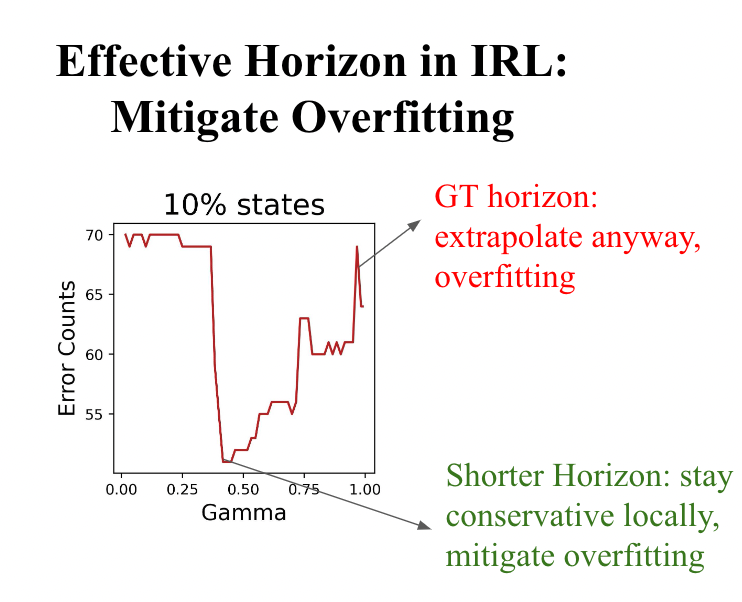

On the Effective Horizon of Inverse Reinforcement Learning. In Proceedings of the International Conference on Autonomous Agents and Multiagent Systems (AAMAS), 2025

-

"Set It Up!": Functional Object Arrangement with Compositional Generative Models (Conference Version). In Robotics: Science and Systems (RSS), 2024

-

"Set It Up": Functional Object Arrangement with Compositional Generative Models (Journal Version). The International Journal of Robotics Research (IJRR), 2025

-

"Stack It Up!": 3D Stable Structure Generation from 2D Hand-drawn Sketch. In Proceedings of the Conference on Robot Learning (CoRL), 2025
